Using supercomputers efficiently for numerical simulations, such as reservoir simulations, is highly non-trivial. High-performance computing (HPC) requires addressing at least three challenges:

- The overall computational work must be appropriately partitioned into pieces, which is the first step for using supercomputers because they are built upon the principle of parallel processing.

- The numerical algorithm chosen for running a reservoir simulation on a supercomputer must be parallelizable and, moreover, scalable with an increasing number of processing units.

- The software implementation must be able to effectively utilize the capabilities of the processing units and the interconnect between them on a supercomputer.

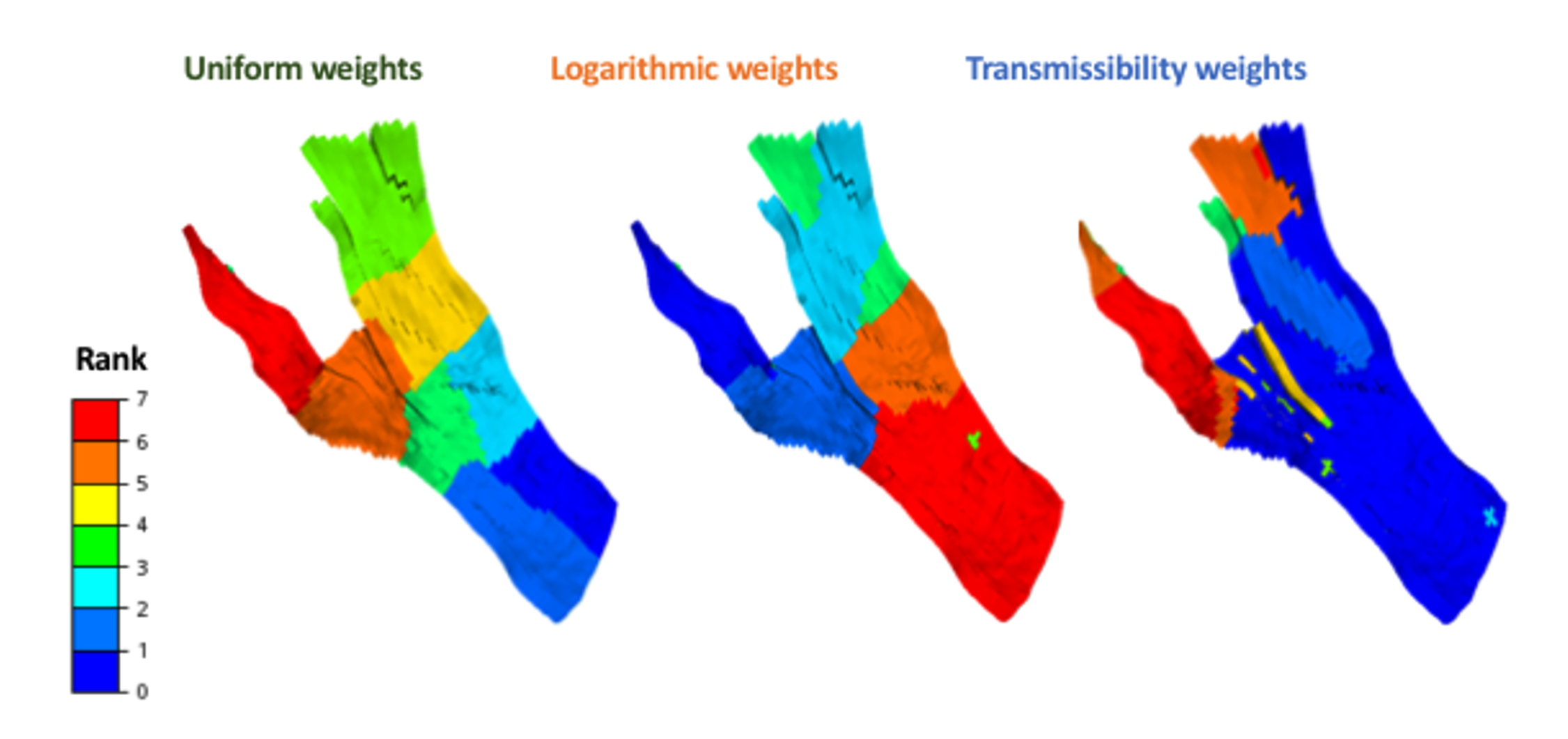

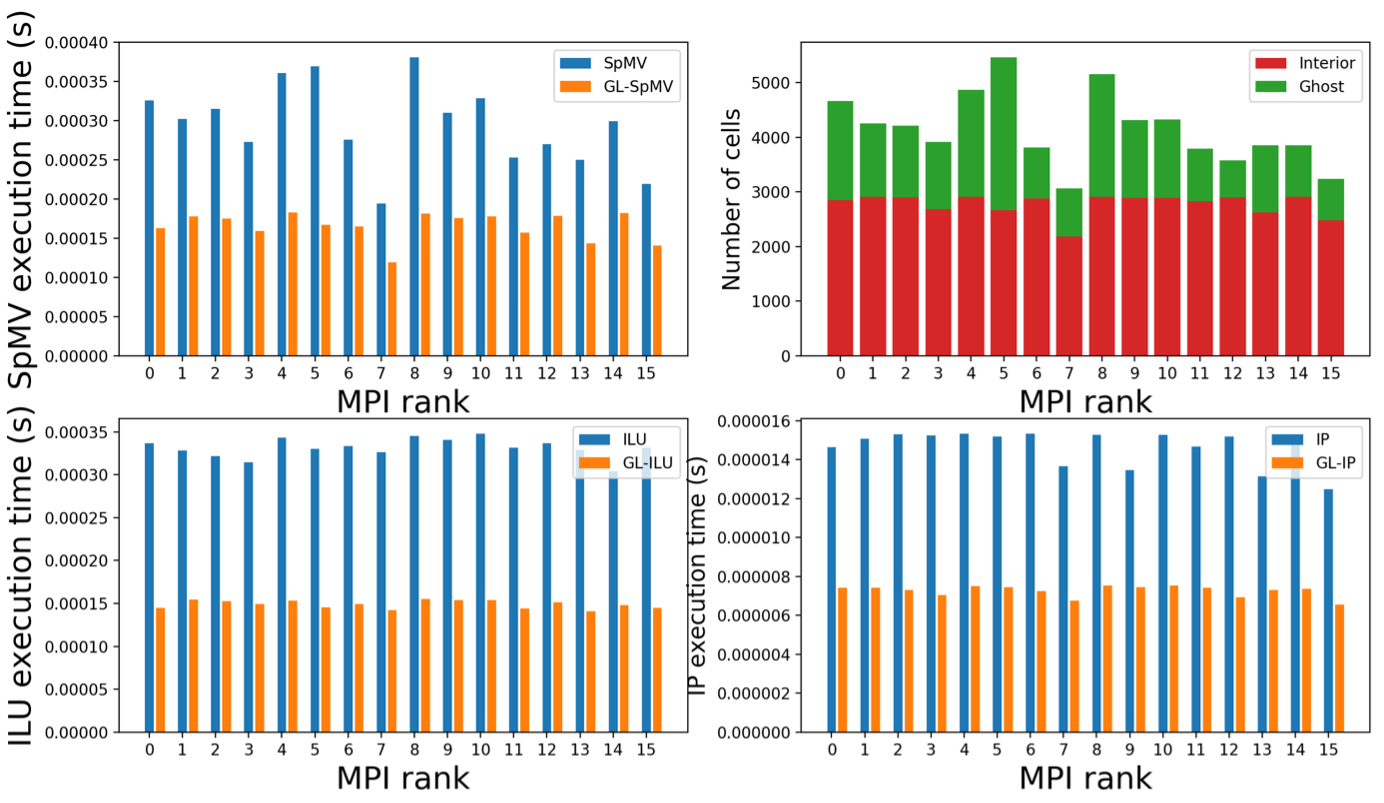

For reservoir simulations in particular, the above three challenges are intertwined. First, underground reservoirs generally have irregular 3D shapes, so unstructured computational meshes must be adopted to resolve the geometric irregularities. Second, partitioning unstructured meshes alone (without considering the actual computations to be executed on top of them) is already a demanding task. The added difficulty for reservoir simulations is that advanced numerical strategies are required to solve the mathematical equations involved. These complex algorithms, when parallelized, may require a different goal for the mesh partitioning task. For example, mesh entities that are strongly coupled numerically benefit from being assigned to the same partition instead of being divided among multiple partitions. Therefore, mesh partitioning for parallel reservoir simulations must strike a balance between the standard partitioning criteria and the resulting effectiveness of the parallelized numerical algorithm. To make the situation even more challenging, how well a parallel computer is actually utilized for reservoir simulations also depends on the hardware details and on the specific reservoir case at hand.
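The trade-off described above can be made concrete with a small sketch. It is an illustration only, not the project's partitioning method: the six-cell chain mesh, the edge weights standing in for numerical coupling strength, and the helper names `edge_cut` and `imbalance` are all hypothetical. The sketch compares a perfectly balanced partition with one that keeps the strongly coupled pair of cells together.

```python
from collections import Counter

def edge_cut(edges, part, weighted=True):
    """Sum the weights (or count) of edges whose endpoints lie in different partitions."""
    return sum((w if weighted else 1) for u, v, w in edges if part[u] != part[v])

def imbalance(part, n_parts):
    """Size of the largest partition divided by the ideal (average) size."""
    sizes = Counter(part.values())
    ideal = len(part) / n_parts
    return max(sizes.values()) / ideal

# Hypothetical chain of cells 0-1-2-3-4-5. Each edge is (cell, cell, weight);
# the weight is an assumed measure of coupling strength between neighbours.
edges = [(0, 1, 1.0), (1, 2, 1.0), (2, 3, 100.0), (3, 4, 1.0), (4, 5, 1.0)]

# Standard criteria only: equal sizes, one cut edge (which happens to be the strong one).
balanced = {0: 0, 1: 0, 2: 0, 3: 1, 4: 1, 5: 1}

# Coupling-aware: still one cut edge, but cells 2 and 3 stay together.
coupling_aware = {0: 0, 1: 0, 2: 1, 3: 1, 4: 1, 5: 1}

for name, part in [("balanced", balanced), ("coupling-aware", coupling_aware)]:
    print(name,
          "cut edges:", edge_cut(edges, part, weighted=False),
          "weighted cut:", edge_cut(edges, part),
          "imbalance:", round(imbalance(part, 2), 3))
```

Both partitions cut exactly one edge, so an unweighted edge-cut criterion cannot tell them apart. The weighted cut separates them clearly, at the price of a less even load in the coupling-aware partition, which is the kind of balance the partitioning task has to strike.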

The goals of this SIRIUS project are therefore:

- To investigate the necessary balance between the standard criteria for partitioning unstructured meshes and the reservoir-specific criteria that are important for the parallelized numerical algorithm. Based on this investigation, we want to extend the standard mesh partitioning problem;

- To ensure a high level of performance of parallel reservoir simulations by avoiding non-contributing computations that can arise specifically from parallelization;

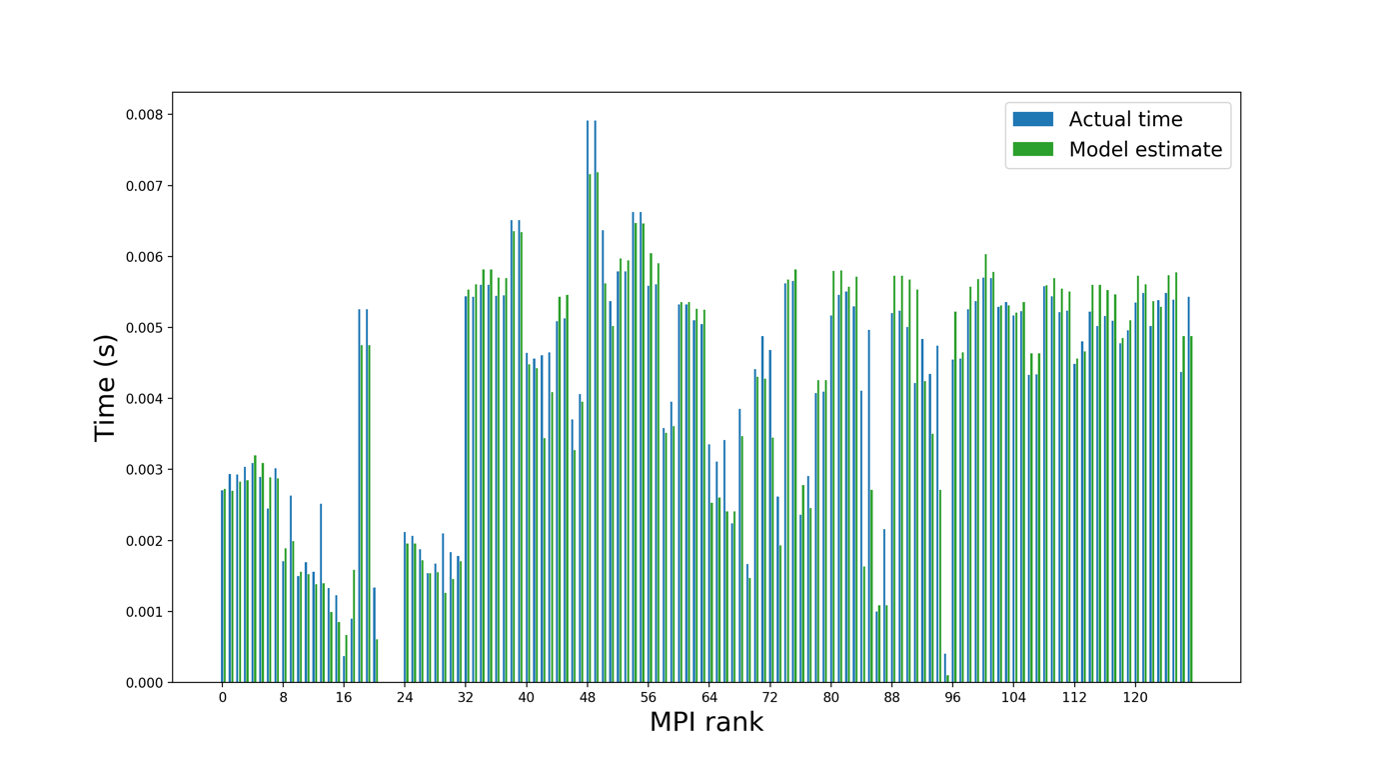

- To obtain a quantitative understanding of the communication overhead that is associated with any parallel implementation of a reservoir simulation, so that the different pieces can be mapped better to the actual hardware processing units;

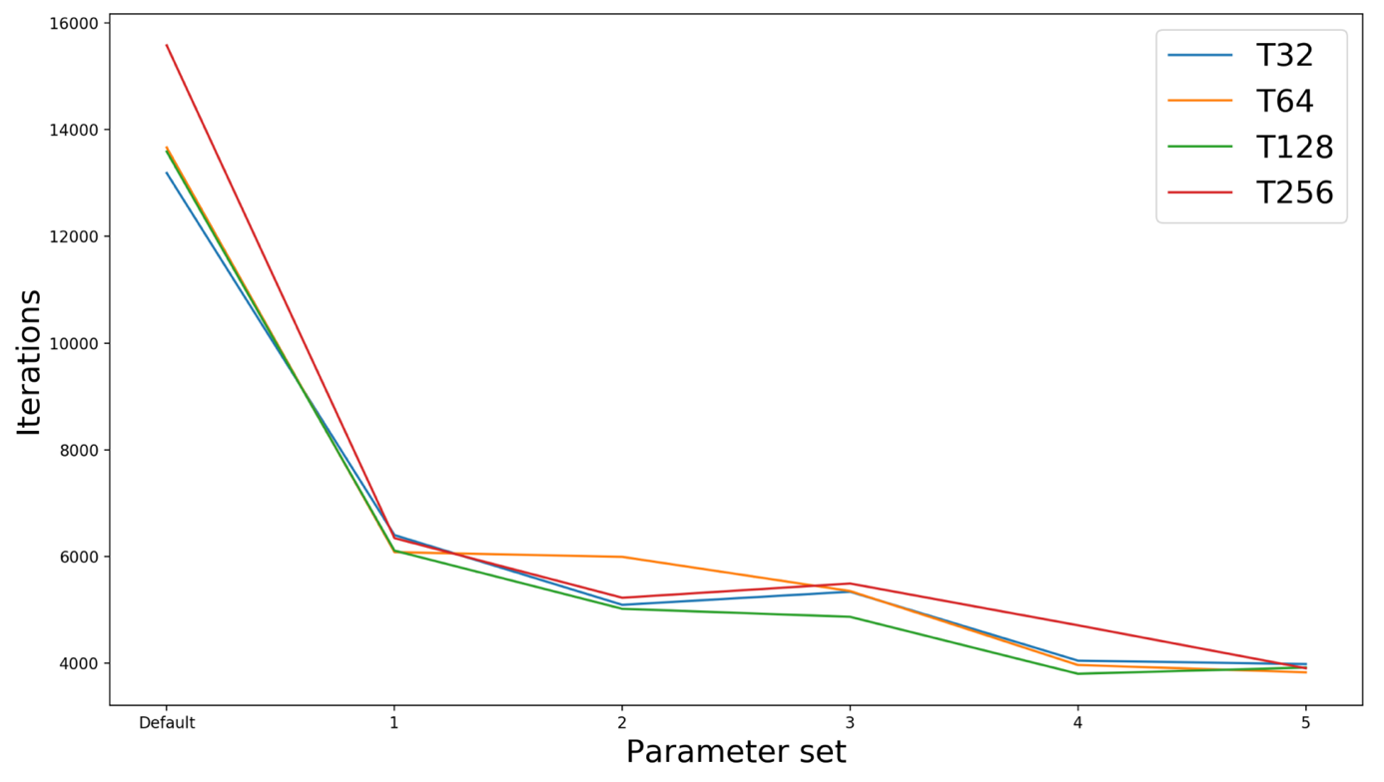

- To avoid wasting computational effort by automatically identifying good choices for the inherent parameters of the numerical algorithm, so that accuracy is maintained while the numerical algorithm progresses faster than it would with suboptimal parameters.
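As a rough illustration of the goal of quantifying communication overhead, the sketch below counts, for a given partition, how many cells each piece must receive from its neighbours. It rests on stated assumptions rather than the project's actual model: communication is taken to be proportional to the number of distinct "ghost" cells (cells owned by another partition but adjacent to a local cell), and the 2 x 3 grid and the helper names `ghost_cells` and `comm_volume` are hypothetical.

```python
from collections import defaultdict

def ghost_cells(edges, part):
    """For each partition, collect the neighbouring cells that are owned elsewhere."""
    ghosts = defaultdict(set)
    for u, v in edges:
        pu, pv = part[u], part[v]
        if pu != pv:
            ghosts[pu].add(v)  # partition pu needs data from cell v
            ghosts[pv].add(u)  # partition pv needs data from cell u
    return dict(ghosts)

def comm_volume(edges, part):
    """Number of distinct ghost cells per partition (assumed proxy for data received)."""
    return {p: len(cells) for p, cells in ghost_cells(edges, part).items()}

# Hypothetical 2 x 3 grid of cells:
#   0 1 2
#   3 4 5
grid_edges = [(0, 1), (1, 2), (3, 4), (4, 5), (0, 3), (1, 4), (2, 5)]

# One column of cells per partition.
by_column = {0: 0, 3: 0, 1: 1, 4: 1, 2: 2, 5: 2}

print(comm_volume(grid_edges, by_column))
```

In this example the middle partition receives twice as many ghost cells as the two outer ones, even though all three own the same number of cells. Per-partition figures of this kind are one possible input when deciding how to map the pieces to hardware processing units.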