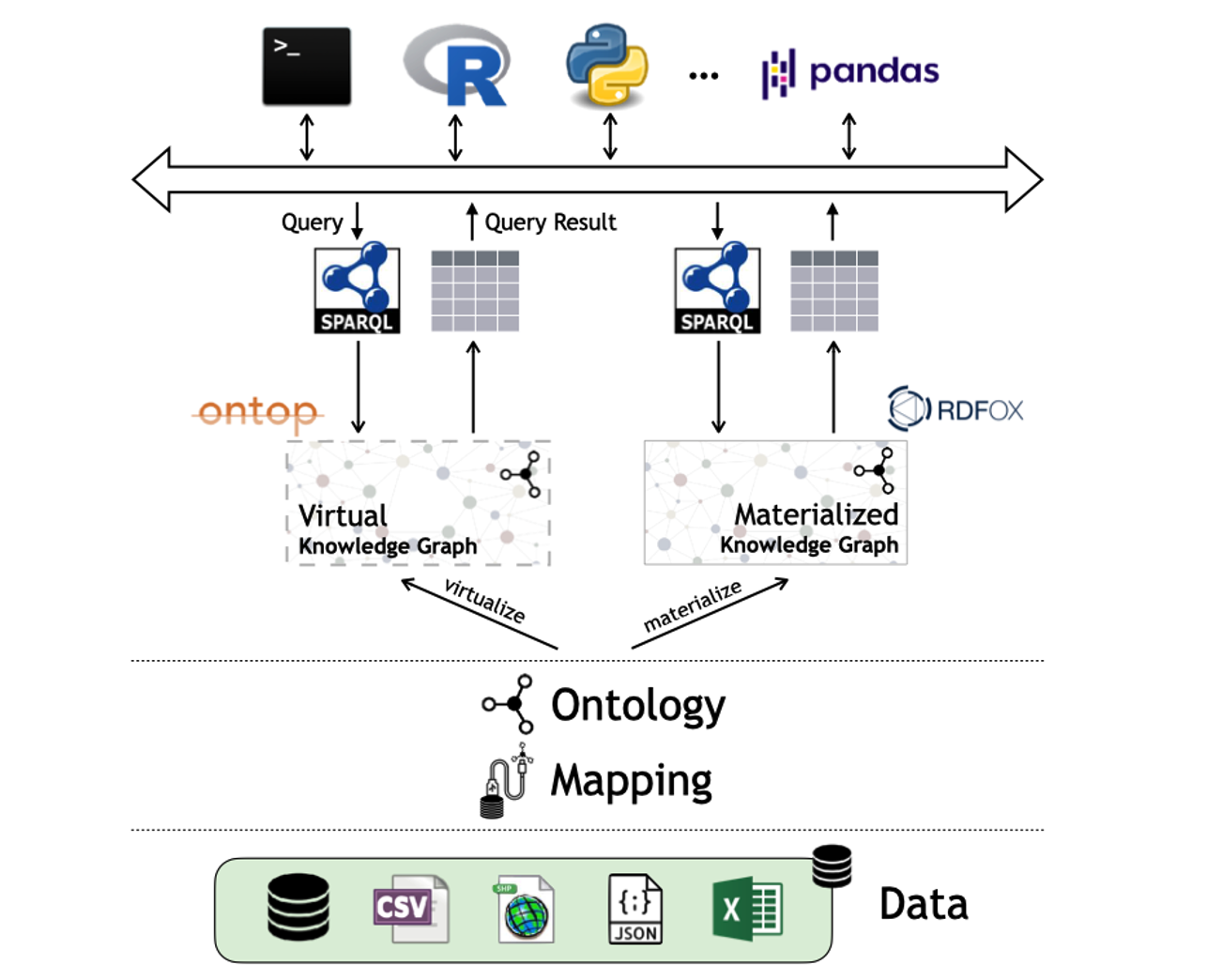

Data in the oil and gas domain typically resides in several different sources, which can differ vastly in form and access method. To support optimal decision making, all of this data must be taken into account, so end users need to be able to view and understand all of it.

Accessing the data in its legacy formats requires in-depth, low-level knowledge of how the data is stored, which is a considerable challenge for end users. By integrating all data under a common ontology, users can view and explore the data in a language they understand. This research program aims to address the issues that arise during this integration process, in particular by designing and developing scalable infrastructure for integrating multiple large datasets and large-scale ontologies.