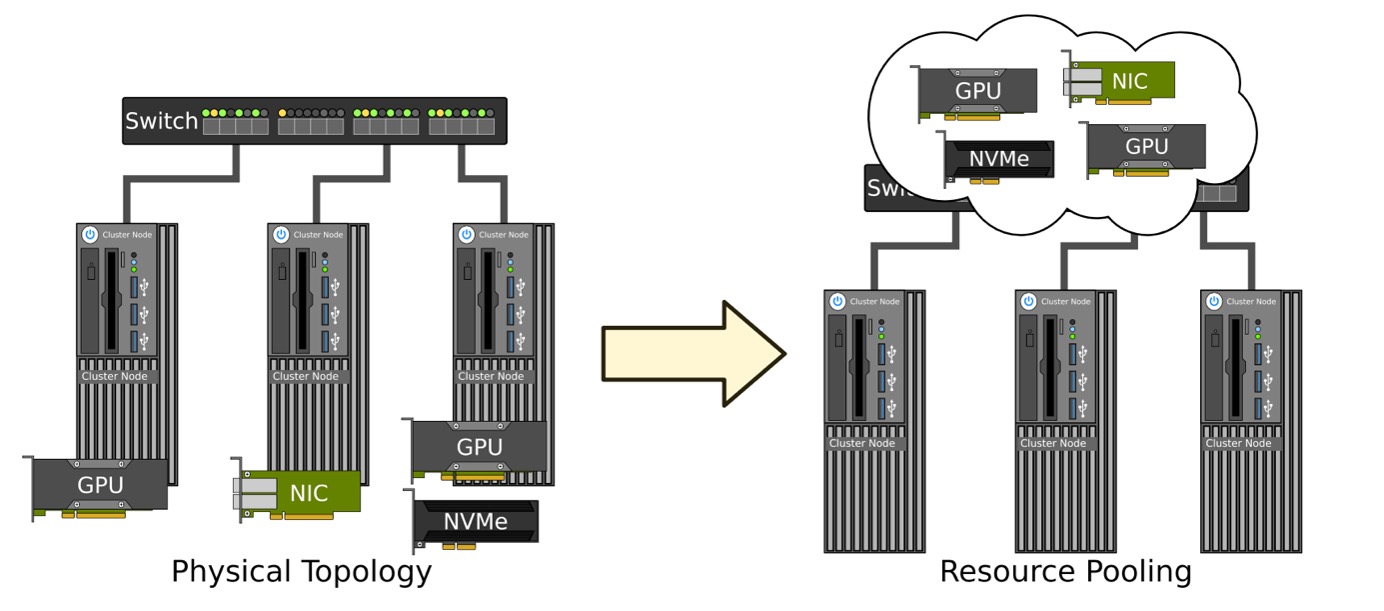

Distributed and parallel computing applications are becoming increasingly compute-heavy and data-driven, accelerating the need for disaggregation solutions that enable sharing of I/O resources between networked machines. For example, in a heterogeneous computing cluster, different machines may have different devices available to them, but distributing I/O resources in a way that maximizes both resource utilization and overall cluster performance is a challenge. To facilitate device sharing and memory disaggregation among machines connected using PCIe non-transparent bridges, we present SmartIO. SmartIO makes all machines in the cluster, including their internal devices and memory, part of a common PCIe domain. By leveraging the memory mapping capabilities of non-transparent bridges, remote resources may be used directly, as if these resources were local to the machines using them. Whether devices are local or remote is made transparent by SmartIO. NVMes, GPUs, FPGAs, NICs, and any other PCIe device can be dynamically shared with and distributed to remote machines, and it is even possible to disaggregate devices and memory, in order to share component parts with multiple machines at the same time. Software is entirely removed from the performance-critical path, allowing remote resources to be used with native PCIe performance. To demonstrate that SmartIO is an efficient solution, we have performed a comprehensive evaluation consisting of a wide range of performance experiments, including both synthetic benchmarks and realistic, large-scale workloads. Our experimental results show that remote resources can be used without any performance overhead compared to using local resources, in terms of throughput and latency. Thus, compared to existing disaggregation solutions, SmartIO provides more efficient, low-cost resource sharing, increasing the overall system performance and resource utilization.

SmartIO: Device sharing and memory disaggregation in PCIe clusters using non-transparent bridging

Introduction

Cluster computing applications often have demanding I/O performance requirements. For example, many computing clusters rely on compute accelerators, such as graphics processing units (GPUs) and field-programmable gate arrays (FPGAs), to increase processing speed. In recent years, we have also seen a convergence of the high-performance computing, big data, and machine learning research fields. This development has led to new demands on I/O performance: fast access to high-volume storage devices is becoming a requirement for high-performance computing, while low-latency networking and the use of compute accelerators have become cloud computing issues [8, 52, 55]. If I/O resources (devices) are sparsely distributed in the cluster, the machines that have them may become bottlenecks, for example when a workload requires heavy computation on GPUs or fast access to storage. Conversely, over-provisioning machines with resources may leave devices underutilized if a workload’s I/O demands are more sporadic. Distributed processing workloads may even require a heterogeneous cluster design, with widely different compositions of devices and memory resources for individual machines in the cluster. Being able to share and partition devices between cluster machines at run-time leads to more efficient utilization, as individual machines may dynamically scale their I/O resources up or down based on current workload requirements.

In order to meet the latency and throughput requirements of data-driven and compute-heavy workloads, there is a need for flexible, yet efficient, sharing of I/O resources in computing clusters. This dissertation contributes to this goal by presenting a solution that enables distributing devices and sharing memory resources between machines interconnected with Peripheral Component Interconnect Express (PCIe) [42]. By leveraging memory mapping functionality supported by the PCIe networking hardware, we make it possible to use resources residing in remote machines as if they were installed in the same machine. Whether resources are local or remote is made transparent to application software, operating system (OS), and even device drivers, and remote resources can be used in a manner that is indistinguishable from using resources attached to the local PCIe bus. Existing device drivers and application software may use remote resources without requiring any adaptations. Not only does this make it easier to increase the overall resource utilization in the cluster, but it also becomes easier to design and implement distributed applications as software no longer needs to be written with accessing remote resources in mind, but can instead be implemented as if all resources are local. Using our solution, I/O resources are no longer locked to individual machines, and can instead be shared freely with other machines in the cluster.
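The memory-mapping idea described above can be illustrated with a toy model. The following Python sketch is not SmartIO code and uses no real NTB driver API; the `NtbWindow` class, its field names, and all addresses are hypothetical, chosen only to show how an address inside a mapped window on the local machine could correspond to an address in a remote machine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NtbWindow:
    """Hypothetical model of one NTB mapping window (not a SmartIO API)."""

    local_base: int   # start of the window in the local address space
    remote_base: int  # address the window points to in the remote machine
    size: int         # window length in bytes

    def contains(self, local_addr: int) -> bool:
        """Return True if the local address falls inside the window."""
        return self.local_base <= local_addr < self.local_base + self.size

    def translate(self, local_addr: int) -> int:
        """Map a local address inside the window to the remote address."""
        if not self.contains(local_addr):
            raise ValueError(f"address {local_addr:#x} is outside the window")
        return self.remote_base + (local_addr - self.local_base)


# Illustrative values only: a 1 MiB window onto a remote device's registers.
window = NtbWindow(local_base=0x9000_0000, remote_base=0xF000_0000, size=1 << 20)
remote_addr = window.translate(0x9000_0040)
print(hex(remote_addr))  # prints 0xf0000040
```

In this model, a read or write that lands inside the window is forwarded with its offset preserved. In an actual cluster, the corresponding translation would be carried out by the PCIe networking hardware rather than by software such as this.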

Results

Cluster computing and other distributed applications often need to process large amounts of data, which places high performance requirements on I/O resources such as flash storage and graphics cards. However, while such resources are now common in individual computer systems, distributing them across machines in a cluster in a way that maximizes both performance and resource utilization is a challenge.

Our SmartIO solution enables computers in a computing cluster to share their I/O resources with each other. Machines can lend out their internal devices and borrow remote resources from other machines. By using a special network adapter called a non-transparent bridge, SmartIO memory-maps remote resources into the local system. Software can be written as if all resources are local, greatly reducing programming complexity compared to existing sharing solutions, which require distributed programming paradigms. In other words, resource sharing becomes easier with SmartIO. Our thorough performance evaluation shows that devices can be shared and used with minimal performance overhead using SmartIO, increasing the overall resource utilization in the cluster. For more information, see: https://www.simula.no/publications/smartio-device-sharing-and-memory-disaggregation-pcie-clusters-using-non-transparent

Selected Publications

- Lars Bjørlykke Kristiansen, Jonas Markussen, Håkon Kvale Stensland, Michael Riegler, Hugo Kohmann, Friedrich Seifert, Roy Nordstrøm, Carsten Griwodz, and Pål Halvorsen. “Device Lending in PCI Express Networks.” In: Proceedings of the 26th ACM International Workshop on Network and Operating Systems Support for Digital Audio and Video. NOSSDAV’16. May 2016, 10:1–10:6. doi: 10.1145/2910642.2910650

- Konstantin Pogorelov, Michael Riegler, Jonas Markussen, Håkon Kvale Stensland, Pål Halvorsen, Carsten Griwodz, Sigrun Losada Eskeland, and Thomas de Lange. “Efficient Processing of Videos in a Multi Auditory Environment Using Device Lending of GPUs.” In: Proceedings of the 7th ACM International Conference on Multimedia Systems. MMSys’16. May 2016, pp. 381–386. doi: 10.1145/2910017.2910636

- Jonas Markussen, Lars Bjørlykke Kristiansen, Håkon Kvale Stensland, Friedrich Seifert, Carsten Griwodz, and Pål Halvorsen. “Flexible Device Sharing in PCIe Clusters Using Device Lending.” In: Proceedings of the 47th ACM International Conference on Parallel Processing Companion. ICPP’18 Comp. August 2018, 48:1–48:10. doi: 10.1145/3229710.3229759

- Jonas Markussen, Lars Bjørlykke Kristiansen, Rune Johan Borgli, Håkon Kvale Stensland, Friedrich Seifert, Michael Riegler, Carsten Griwodz, and Pål Halvorsen. “Flexible Device Compositions and Dynamic Resource Sharing in PCIe Interconnected Clusters using Device Lending.” In: Cluster Computing vol. 23, no. 2 (June 2020), pp. 1211–1234. issn: 1573-7543. doi: 10.1007/s10586-019-02988-0

Team

Jonas Sæther Markussen, Håkon Kvale Stensland, Carsten Griwodz, Pål Halvorsen

Partners

- Department of Informatics of UiO

- Dolphin Interconnect Solutions

- Simula Research Laboratory

Acknowledgements

This work was supported by the Department of Informatics at the University of Oslo, Simula Research Laboratory, and Dolphin Interconnect Solutions.