SIndAIS4: Scaling Industrial AI with Semantics

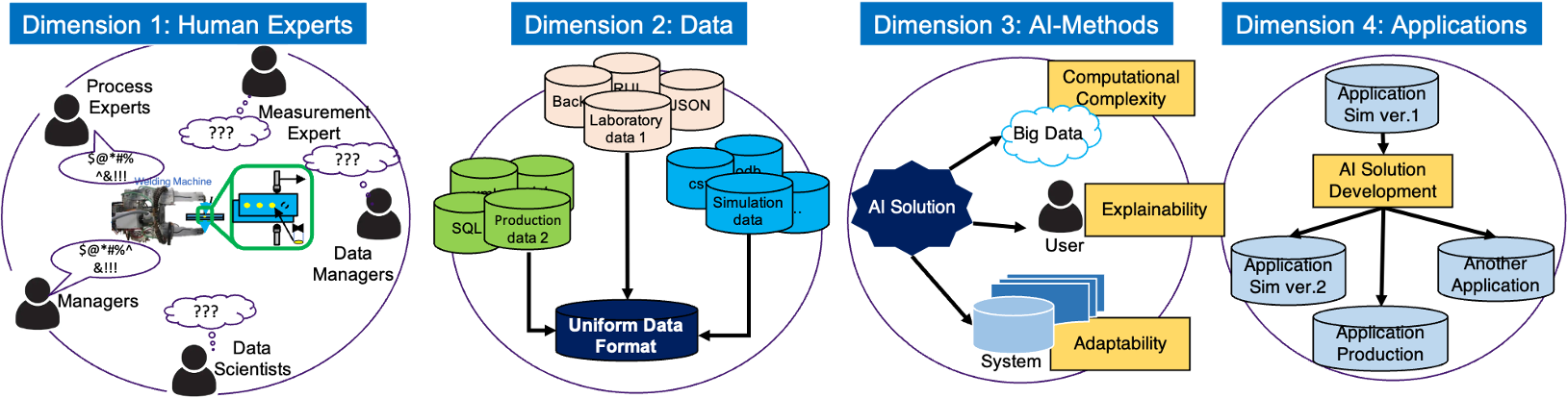

Technological advances in Industry 4.0 and the Internet of Things have brought us into a new age, in which manufacturing, engineering, logistics and other sectors are undergoing a digitalisation that aims at highly automated and interconnected systems. A series of profound changes towards omnipresent sensing, ubiquitous connectivity, decentralised and intelligent information processing, and efficient actuation raises industrial as well as research challenges that need to be addressed by Artificial Intelligence (AI). However, AI is by nature multidisciplinary and demanding, which impedes its widespread adoption in industry. Bosch and UiO address these challenges by jointly driving the SIRIUS project SIndAIS4 (Scaling Industrial AI in 4 dimensions by resorting to Semantic technologies). The project aims to develop infrastructures, technologies and tools for industrial AI, to lower the bar for users to learn and deploy AI solutions, and to scale such solutions for industry. In particular, we aim to develop methods and systems that scale along the following four dimensions:

- [D1] Humans: allow more people to use and develop AI solutions in industry. This is vital to spread and scale the practice and use of AI methods in industry.

- [D2] Data: account for the four Vs of big industrial data: volume, variety, velocity and veracity. This is vital to ensure that AI solutions scale to real industrial data.

- [D3] Methods: allow more AI methods to be systematically organised, automatically selected and deployed in industrial applications. This is vital to scale the complexity of AI solutions.

- [D4] Applications: allow AI solutions to be reused across industrial assets and application scenarios. This is vital to enable wide reuse of already developed AI methods.

We now introduce two use cases that will allow us to exemplify our research in SIndAIS4.

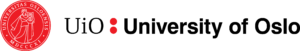

Use Case 1: Quality Monitoring of Automated Welding at Bosch

Resistance Spot Welding (RSW) is a fully automated welding process widely applied in the automotive industry, e.g. for car body production (see figure). During this process, two welding electrodes press the metal worksheets together with force. Then a high electric current flows from one electrode, through the worksheets, to the other electrode, generating a large amount of heat due to electric resistance. The material in a small area between the two electrodes, called the welding spot, melts and forms a weld nugget that connects the worksheets.
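The heat generation described above follows Joule's law: the energy dissipated in the electrical resistance is Q = ∫ I(t)² R(t) dt. As a minimal sketch, assuming that current and resistance are available as equally spaced samples, the integral can be approximated with the trapezoidal rule. The function name and the sample values are hypothetical and purely illustrative; they are not Bosch process data.

```python
from typing import Sequence


def joule_heat(current_a: Sequence[float], resistance_ohm: Sequence[float], dt_s: float) -> float:
    """Approximate Q = integral of I(t)^2 * R(t) dt, in joules, with the trapezoidal rule.

    current_a and resistance_ohm hold samples taken every dt_s seconds.
    """
    if len(current_a) != len(resistance_ohm):
        raise ValueError("current and resistance need the same number of samples")
    # Instantaneous electrical power P = I^2 * R at every sample.
    power = [i * i * r for i, r in zip(current_a, resistance_ohm)]
    # Trapezoidal rule: average neighbouring power samples and multiply by the sampling interval.
    return sum((p0 + p1) / 2.0 * dt_s for p0, p1 in zip(power, power[1:]))


# Hypothetical process curve: a constant 10 kA through 100 micro-ohm, sampled every 1 ms for 200 ms.
n_samples = 201
energy_j = joule_heat([10_000.0] * n_samples, [100e-6] * n_samples, dt_s=1e-3)
print(f"{energy_j:.1f} J")  # prints 2000.0 J
```

With real process curves, current and resistance vary during the weld, which is why the sketch integrates sample by sample instead of multiplying constants.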

A car can have up to 6000 welding spots. A quality failure at a single spot can stop the entire production line, which means lost maintenance time, effort and money, as well as the loss of several cars. Given the number of cars produced every day, the potential impact of this process is considerable.

The diameter of a welding spot is typically used as the quality indicator of a single welding operation according to industrial standards, but it is difficult to measure precisely. The customary practice is to destroy the welded worksheets (car bodies in the automotive industry), which is extremely expensive and can only be done for very few samples. Non-destructive methods, such as ultrasonic and X-ray testing, are also costly and time-consuming, and yield imprecise results.

Schematic Illustration of a Welding Machine and Worksheets

Bosch is developing data-driven quality monitoring solutions for the welding process. Their development involves acquiring data from process development and production, defining concrete quality monitoring tasks, preparing and analysing the data, and deploying models in production plants. These solutions enable convenient visualisation, quality prediction, process optimisation, etc. The aim is to reduce quality failures and the number of destroyed car bodies, and to improve the quality of welding spots.

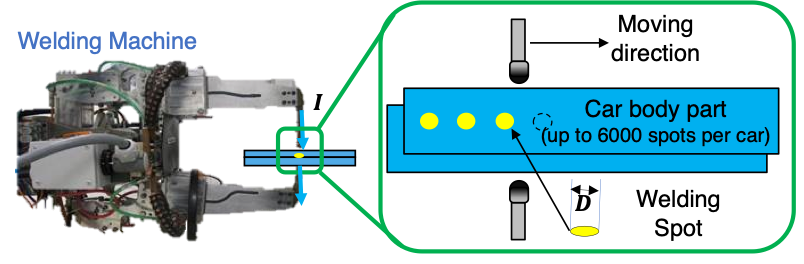

Use Case 2: Data Management of Offshore Oil and Gas Facilities

The main task of exploration geologists in oil companies is to find exploitable deposits of oil or gas in given areas and to analyse existing deposits. This is typically done by investigating how the parts of the Earth's crust in the area of interest are composed. The figure shows an area of investigation around two offshore platforms, where the crust under the platforms has several rock layers. The crust can be analysed indirectly with seismic investigations, and more directly by collecting rock samples and so-called log curves from drilled wellbores. In the former case, the analyses record the time of the echoes of small explosive charges that are fired repeatedly over the area of interest, and use this information to estimate the geological composition of the ground below. In the latter case, the analysis is done on rock samples taken both during and after drilling, and on measurements collected from sensors installed along the wellbore.
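The echo timing mentioned above rests on the two-way travel-time relation: a reflected wave travels down to a layer boundary and back up, so a layer of constant velocity v crossed in two-way time Δt has thickness v·Δt/2. The sketch below, assuming one constant velocity per layer, accumulates these thicknesses into reflector depths. The function name, velocities and times are hypothetical, and real seismic interpretation requires far more processing than this.

```python
def reflector_depths(two_way_times_s: list[float], velocities_m_s: list[float]) -> list[float]:
    """Depth (m) of the base of each layer from cumulative two-way travel times.

    two_way_times_s[i] is the increasing two-way time to the base of layer i, and
    velocities_m_s[i] is the assumed constant wave velocity inside layer i.
    """
    if len(two_way_times_s) != len(velocities_m_s):
        raise ValueError("one velocity per layer is required")
    depths: list[float] = []
    depth, previous_time = 0.0, 0.0
    for time, velocity in zip(two_way_times_s, velocities_m_s):
        # The wave crosses the layer twice (down and up), hence the division by 2.
        depth += velocity * (time - previous_time) / 2.0
        depths.append(depth)
        previous_time = time
    return depths


# Hypothetical offshore profile: a water column (about 1500 m/s) above two rock layers.
print(reflector_depths([0.4, 1.0, 1.5], [1500.0, 2500.0, 3500.0]))
```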

By combining information from wellbores, seismic investigations and general geological knowledge, geologists can, for example, assess which types of rock are in the reservoir and intersected along the wellbore. Geologists can also predict oil and gas reservoir properties such as completion performance, pressure and saturation changes, as well as crude oil production and quality. They typically do this in two steps:

- find relevant wellbore, seismic, and other data in databases

- analyse these data with specialised analytical tools and Machine Learning methods.

Schematic Illustration of Petroleum Reservoirs

It has been observed in many companies that the first step, finding the relevant data, is the most time-consuming part of the process. On the one hand, the size and complexity of the data sources and access methods make it impossible for end users to collect the necessary data efficiently. On the other hand, involving IT staff who can implement data-gathering queries makes the process lengthy. Companies are exploring semantic approaches to improve these processes and to facilitate both data integration and analytics or learning over the integrated data in the oil and gas domain.
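Ontology-based data access (OBDA), the approach reported for Statoil in [12], is one such semantic approach: users query in domain vocabulary, and mappings translate their queries into queries over the databases. The sketch below shows only the core unfolding idea, using Python's built-in `sqlite3`: each ontology term is mapped to an SQL query, and a query over one class and one property is answered by joining the two mapped queries. The schema, the vocabulary (`Wellbore`, `inField`) and the function name are hypothetical; real OBDA systems additionally perform ontology reasoning, query rewriting and optimisation.

```python
import sqlite3

# Hypothetical source schema with cryptic names, as legacy databases often have.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE wb (wb_id TEXT PRIMARY KEY, fld_name TEXT);
    INSERT INTO wb VALUES ('W-1', 'Alpha'), ('W-2', 'Beta'), ('W-3', 'Alpha');
    """
)

# Hypothetical mappings: a class maps to a one-column query (its instances),
# a property to a two-column query (subject s, object o).
MAPPINGS = {
    "Wellbore": "SELECT wb_id AS s FROM wb",
    "inField": "SELECT wb_id AS s, fld_name AS o FROM wb",
}


def instances_with_value(cls: str, prop: str, value: str) -> list[str]:
    """Answer 'all instances of cls whose prop equals value' by unfolding the mappings into SQL."""
    sql = (
        f"SELECT c.s FROM ({MAPPINGS[cls]}) AS c "
        f"JOIN ({MAPPINGS[prop]}) AS p ON c.s = p.s "
        "WHERE p.o = ? ORDER BY c.s"
    )
    # The mappings are trusted configuration; only the user's value is passed as a parameter.
    return [row[0] for row in conn.execute(sql, (value,))]


print(instances_with_value("Wellbore", "inField", "Alpha"))
```

The end user only needs the terms `Wellbore` and `inField`; the table and column names stay hidden behind the mappings.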

Connecting Use Cases to SIndAIS4

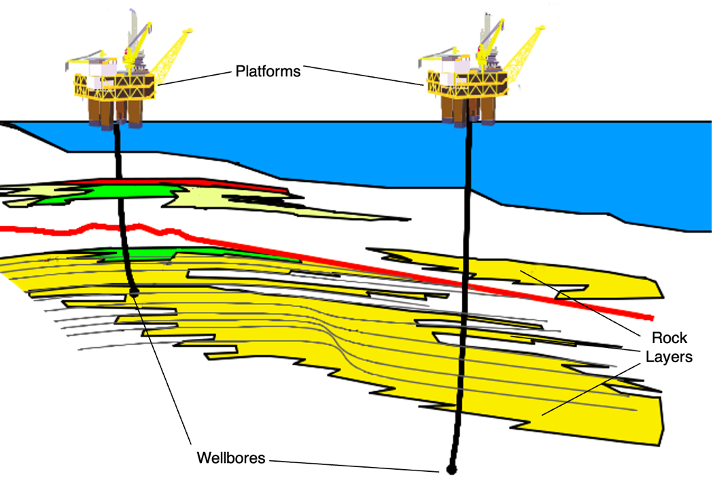

As the discussion of the use cases shows, developing data-driven AI solutions is a complex and costly process. In both use cases, development follows a similar six-step procedure, shown in the following figure. These steps help us exemplify the four SIndAIS4 dimensions.

Development Procedure for AI Solutions in Industry

The development procedure is iterative and includes data acquisition (Step 1), task negotiation (Step 2), data preparation (Step 3), data analysis (Step 4), result interpretation (Step 5) and, finally, model deployment (Step 6). In the figure, Steps 2 to 6 are annotated with the dimensions D1 to D4 that matter most for them. We now explain in more detail how these four dimensions are reflected in the use cases.

[D1] is knowledge communication between human experts: Steps 2 and 5 require collaborative work of experts from different areas. Their knowledge backgrounds are asymmetric: the data and knowledge of each domain are complex, and sophisticated ML algorithms limit the transparency of results and models. This makes communication time-consuming and error-prone. In Use Case 1, these experts include welding engineers (process experts), material scientists, measurement experts, data managers, data scientists and managers. In Use Case 2, they include petroleum engineers (process experts), geologists, data managers, data scientists and managers.

[D2] is data preparation: Step 3 requires integrating data from different sources, a labour-intensive effort that requires an understanding of multi-faceted domain knowledge and of many data complications. In Use Case 1, the data sources include simulation data and laboratory data from the process development phase, and production data from different car factories at Bosch and at many of Bosch's renowned customers. In Use Case 2, they include simulation data from the process development phase and production data from many oil wells and offshore platforms.

[D3] is the scalability of data solutions based on AI methods: many AI methods are developed on small, selected datasets. Some of them may not apply to big data problems because of the computational complexity of the models, the laborious effort of adapting systems, and complications in user training and explainability. It is therefore important to select, configure and optimise these AI methods automatically, hiding the complexity from users, so that more AI methods become applicable in industrial scenarios. In Use Case 1, these AI methods include various machine learning methods with feature engineering supported by domain knowledge, e.g. linear regression, neural networks and support vector regression. In Use Case 2, they include symbolic reasoning in ontology-based data access, search through the knowledge graph and learning over sensor data.
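In its simplest form, automatic method selection means fitting several candidate methods and keeping the one with the lowest validation error. The sketch below assumes a hold-out split and two deliberately simple, hypothetical candidates (a mean predictor and a least-squares line); the data are invented for illustration. SIndAIS4 approaches selection through semantic encodings of ML knowledge (Topic 3), so this only shows the underlying selection criterion, not the project's method.

```python
from statistics import mean
from typing import Callable

Model = Callable[[float], float]


def fit_mean(xs: list[float], ys: list[float]) -> Model:
    """Baseline candidate: always predict the training mean."""
    y_bar = mean(ys)
    return lambda x: y_bar


def fit_linear(xs: list[float], ys: list[float]) -> Model:
    """Least-squares line y = a + b * x."""
    x_bar, y_bar = mean(xs), mean(ys)
    b = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sum((x - x_bar) ** 2 for x in xs)
    a = y_bar - b * x_bar
    return lambda x: a + b * x


# Hypothetical candidate catalogue: method name -> fitting function.
CANDIDATES: dict[str, Callable[[list[float], list[float]], Model]] = {
    "mean": fit_mean,
    "linear": fit_linear,
}


def select_method(xs: list[float], ys: list[float], n_train: int) -> tuple[str, float]:
    """Fit every candidate on the first n_train points; return the one with the lowest hold-out MSE."""
    train_x, train_y = xs[:n_train], ys[:n_train]
    test_x, test_y = xs[n_train:], ys[n_train:]
    scores = {}
    for name, fit in CANDIDATES.items():
        model = fit(train_x, train_y)
        scores[name] = mean((model(x) - y) ** 2 for x, y in zip(test_x, test_y))
    best = min(scores, key=scores.get)
    return best, scores[best]


# Hypothetical data with a clear linear trend, so the linear candidate should win.
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [2.1, 3.9, 6.2, 8.0, 9.8, 12.1]
print(select_method(xs, ys, n_train=4))
```

Adding a method to `CANDIDATES` is all it takes to include it in the comparison, which mirrors the goal of hiding the selection from users.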

[D4] is generalisability over applications: each data solution developed in Step 4 is typically tailored to a specific dataset and a single scenario, so reusing it for other data or scenarios, which is often needed, requires significant effort. In Use Case 1, the application scenario for AI solution development can be one resistance spot welding (RSW) production line. The solution developed for this scenario needs to be generalised to other RSW production lines, to the application scenario of process development, and to other welding processes, e.g. hot staking and ultrasonic welding. In Use Case 2, the application scenario can be one oil platform on one reservoir. The solution developed for this scenario needs to be generalised to other oil platforms on other reservoirs.

The following figures and videos give further details on the four dimensions and how we addressed some of them.

Four Dimensions of Scalability of Artificial Intelligence Solutions

Research Topics

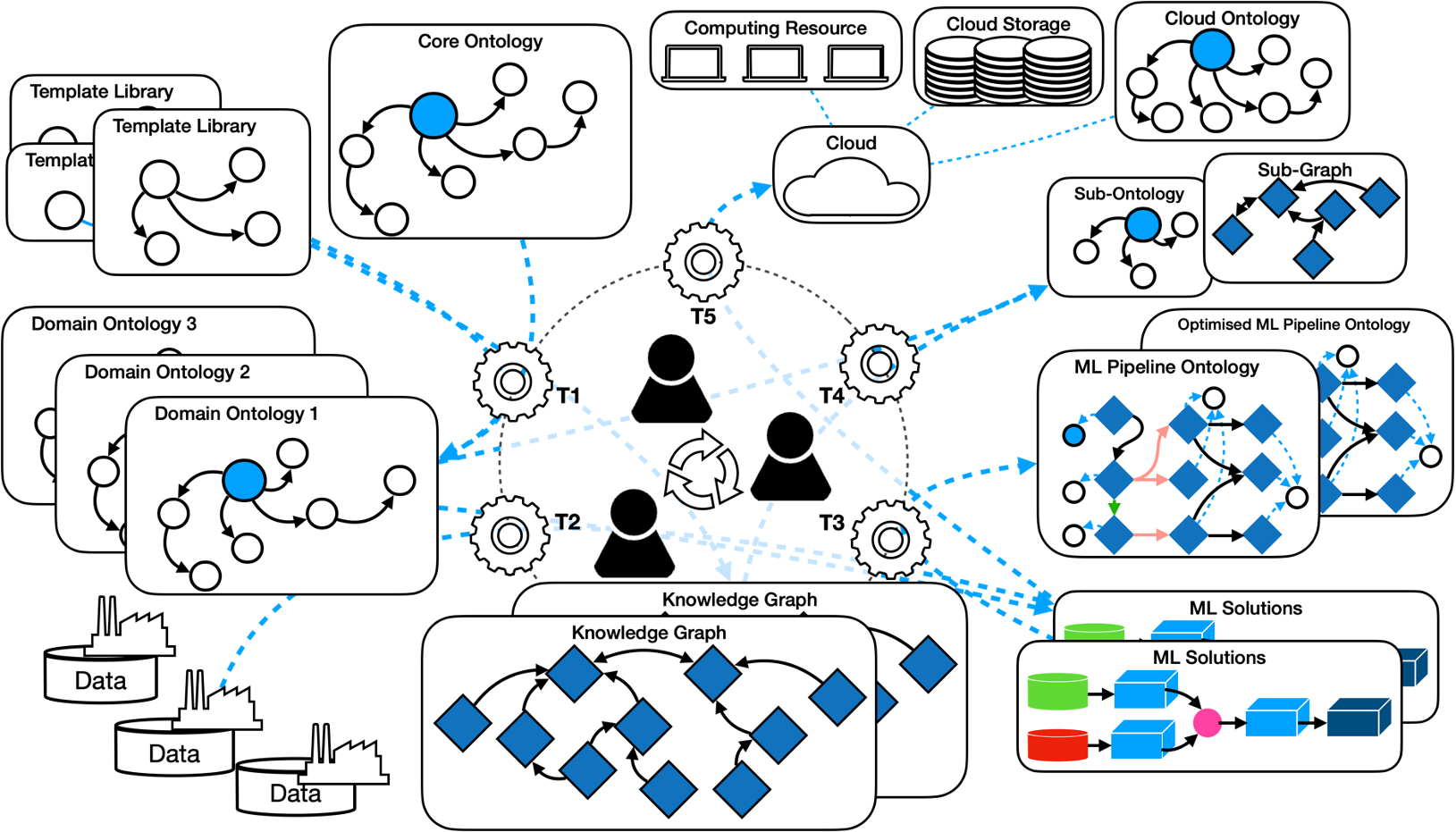

Our research combines semantic technologies and machine learning and encompasses five topics. We will now introduce these topics and then relate them to the four dimensions D1-D4 discussed earlier.

- [T1] Knowledge-aware data management: knowledge-aware data access and management, enabled by user-created domain ontologies or semantic digital twins, and by transforming data to and from knowledge graphs.

- [T2] User-friendly machine learning: users with limited training in machine learning will be able to practise machine learning through user-friendly GUIs, without having to work at the script level.

- [T3] Automating machine learning: automatic learning and construction of machine learning pipelines, enabled by encoding machine learning knowledge and the solution space in ontologies, and by smart neuro-symbolic reasoners that search and optimise over the solution space.

- [T4] Neuro-symbolic knowledge analytics: extracting modules and patterns from knowledge graphs using neural networks and symbolic reasoning.

- [T5] Scalable machine learning on the cloud: scaling machine learning pipelines on the cloud with a semantic abstraction level that mediates between the users, data, machine learning scripts, and cloud resources.

The following table relates the topics to the dimensions:

|  | T1 | T2 | T3 | T4 | T5 |
|---|---|---|---|---|---|
| D1 Human Experts | × | × | × | × | × |
| D2 Data | × |  |  | × | × |
| D3 AI-Methods |  | × | × |  | × |
| D4 Applications | × | × | × | × | × |

In the table, T1 provides a convenient and uniform data access system for users across many applications, which covers D1, D2 and D4. T2 gives users with little AI training a transparent way to use complex AI methods in different applications, thus covering D1, D3 and D4. T3 automates the selection, configuration and optimisation of AI methods, allowing more users to apply a broader range of AI methods in different applications, thus covering D1, D3 and D4. T4 relies on neuro-symbolic methods to improve unified data management, data visualisation, and knowledge understanding and organisation for users across various applications, thus covering D1, D2 and D4. Finally, T5 scales machine learning methods to big data on the cloud and provides users with a simple interface for manipulating cloud resources, thus covering D1, D2, D3 and D4.

The following figure aggregates all topics in an overview diagram. It illustrates how humans are at the centre of an AI-powered process that goes from data to knowledge, and how semantics facilitate this process along the five research topics addressed in SIndAIS4. The diagram connects the dots and puts our five research topics together; the details of each topic are given next.

Overview of the Five Research Topics in SIndAIS4

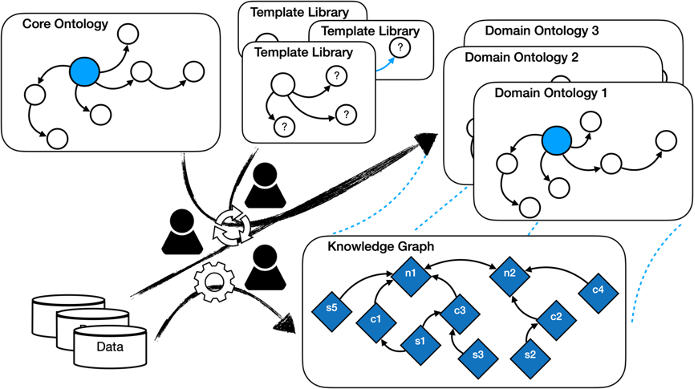

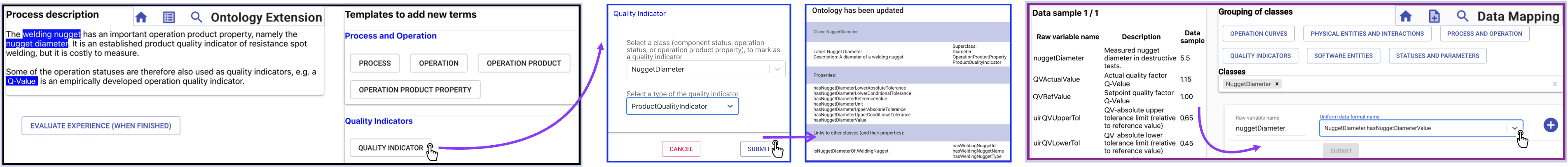

Topic 1: Knowledge-Aware Data Management

We are conducting research on methods and systems with Graphical User Interfaces (GUIs) that allow users to manage data easily while taking knowledge about the data into account. These methods and systems cover the following aspects:

- Knowledge Encoding allows users to create domain ontologies conveniently, based on the core ontology and template libraries. This requires little knowledge of, or training in, semantic technologies from the users.

- Knowledge Annotation enables users to link the data with the knowledge (domain ontologies) they have encoded themselves.

- Data Conversion supports converting data between multiple formats connected to the knowledge, for example by generating knowledge graphs on the fly.
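The data-conversion aspect can be sketched as a round trip between tabular records and subject-predicate-object triples. In the sketch below, each row of a hypothetical welding table becomes a set of triples whose predicates come from a small, hypothetical vocabulary (the `ex:` prefix, `ex:WeldingSpot`, `ex:current`, `ex:diameter`), and the triples can be converted back into rows. Plain tuples stand in for an RDF library; this illustrates the idea and is not the SIndAIS4 tooling.

```python
Triple = tuple[str, str, object]

# Hypothetical annotation: table columns linked to properties of a small domain ontology.
COLUMN_TO_PROPERTY = {"current_ka": "ex:current", "diameter_mm": "ex:diameter"}
PROPERTY_TO_COLUMN = {prop: col for col, prop in COLUMN_TO_PROPERTY.items()}


def rows_to_triples(rows: list[dict]) -> list[Triple]:
    """Generate a knowledge graph on the fly: one typed subject per row, one triple per mapped column."""
    triples: list[Triple] = []
    for row in rows:
        subject = f"ex:spot_{row['spot_id']}"
        triples.append((subject, "rdf:type", "ex:WeldingSpot"))
        for column, prop in COLUMN_TO_PROPERTY.items():
            triples.append((subject, prop, row[column]))
    return triples


def triples_to_rows(triples: list[Triple]) -> list[dict]:
    """Convert the graph back into one record per ex:WeldingSpot subject."""
    rows: dict[str, dict] = {}
    for s, p, o in triples:
        if p == "rdf:type" and o == "ex:WeldingSpot":
            rows.setdefault(s, {"spot_id": s.removeprefix("ex:spot_")})
    for s, p, o in triples:
        if s in rows and p in PROPERTY_TO_COLUMN:
            rows[s][PROPERTY_TO_COLUMN[p]] = o
    return list(rows.values())


# Hypothetical welding records.
table = [
    {"spot_id": "1", "current_ka": 9.5, "diameter_mm": 5.1},
    {"spot_id": "2", "current_ka": 10.2, "diameter_mm": 5.6},
]
graph = rows_to_triples(table)
print(len(graph), "triples; round trip ok:", triples_to_rows(graph) == table)
```

Columns that have no mapping are not converted, so only annotated columns survive the round trip.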

Schematic Illustration of the Data, Knowledge, and Ontologies

Graphical User Interface for Knowledge-Aware Data Management

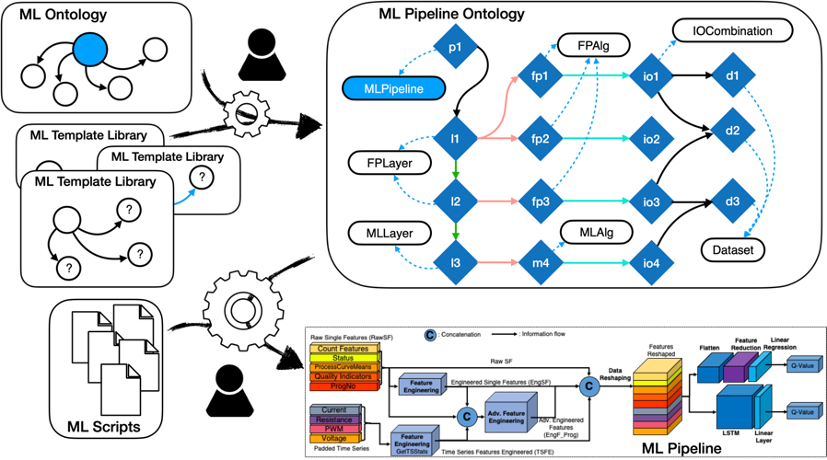

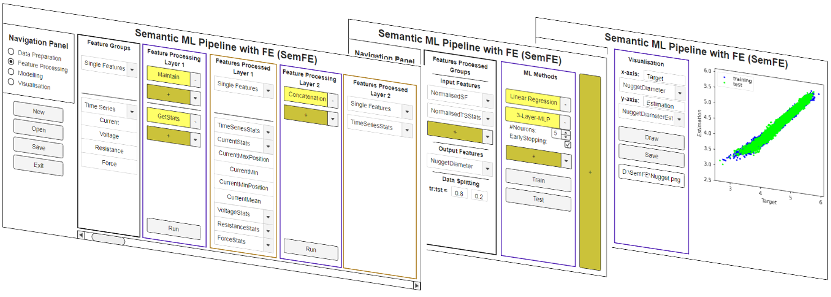

Topic 2: User-Friendly Machine Learning

Developing machine learning solutions is a complex process that requires extensive ML training and an in-depth understanding of the domain and data for which the solutions are developed. Methods and systems for user-friendly machine learning will allow users with little ML training to use, modify or even create practical ML solutions. Our research addresses the following aspects:

ML Knowledge Visualisation and Extension

- Static Mode: users can semantically find, visualise and inspect existing ML pipelines, and select one or more of them from the ML pipeline catalogue to deploy on the data. This requires little or no ML training from the users.

- Dynamic Mode: users who are more familiar with ML can modify, extend or even create ML pipelines by relying on ontologies.

ML Library and Knowledge Encoding

This allows advanced users to extend the system with new ML modules (ML scripts) and to encode these modules, as well as new ML pipelines, into the ML pipeline catalogue using semantically powered ML template libraries.

ML Pipeline Construction

This enables automatic construction of ML pipelines, i.e. translating ML pipeline knowledge encoded in ontologies and knowledge graphs into functioning ML pipelines by linking ML modules with the encoded pipelines.
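A minimal sketch of this translation step, assuming a pipeline is encoded as triples that list its steps, their order and the module implementing each one: the constructor queries those triples, sorts the steps and chains the linked Python functions. The vocabulary (`ex:hasStep`, `ex:order`, `ex:implementedBy`), the module names and the functions are hypothetical.

```python
from statistics import mean
from typing import Callable

Module = Callable[[list], list]


def drop_missing(values: list) -> list:
    """Remove missing measurements (None)."""
    return [v for v in values if v is not None]


def center(values: list) -> list:
    """Subtract the mean so that the values are centred at zero."""
    m = mean(values)
    return [v - m for v in values]


# Hypothetical module library: catalogue names linked to executable ML scripts.
MODULES: dict[str, Module] = {"ex:DropMissing": drop_missing, "ex:Center": center}

# Hypothetical pipeline knowledge as (subject, predicate, object) triples, deliberately unordered.
PIPELINE_KG = [
    ("ex:pipe1", "ex:hasStep", "ex:step2"),
    ("ex:step2", "ex:order", 2),
    ("ex:step2", "ex:implementedBy", "ex:Center"),
    ("ex:pipe1", "ex:hasStep", "ex:step1"),
    ("ex:step1", "ex:order", 1),
    ("ex:step1", "ex:implementedBy", "ex:DropMissing"),
]


def value_of(kg: list, subject: str, prop: str):
    """Return the object of the first triple matching (subject, prop, ?)."""
    return next(o for s, p, o in kg if s == subject and p == prop)


def build_pipeline(kg: list, pipeline: str) -> Callable[[list], list]:
    """Translate the encoded pipeline into a callable that runs the linked modules in order."""
    steps = [o for s, p, o in kg if s == pipeline and p == "ex:hasStep"]
    steps.sort(key=lambda step: value_of(kg, step, "ex:order"))
    modules = [MODULES[value_of(kg, step, "ex:implementedBy")] for step in steps]

    def run(data: list) -> list:
        for module in modules:
            data = module(data)
        return data

    return run


pipeline = build_pipeline(PIPELINE_KG, "ex:pipe1")
print(pipeline([1.0, None, 3.0]))  # prints [-1.0, 1.0]
```

A step whose module is missing from the library raises a `KeyError` at construction time, which is where a real system would report an inconsistency between catalogue and library.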

Schematic Illustration of the System Architecture of User-Friendly Machine Learning

Graphical User Interface of the System of User-Friendly Machine Learning

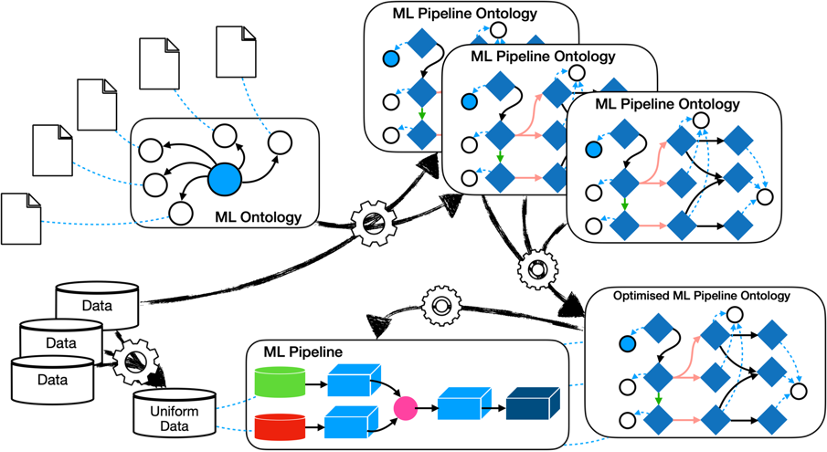

Topic 3: Automated Machine Learning

Encoding ML knowledge and ML solutions in formal languages defines the space of allowed ML solutions and enables automatic search for, and generation of, new ML solutions depending on data, domain and performance requirements. This has the following aspects:

Formal Description of Machine Learning

We study the best languages and practices for encoding general ML knowledge, as well as the ML knowledge of specific solutions, as ontologies and knowledge graphs. The encoding should be convenient, flexible, consistent and comprehensive.

Automatic Reasoning of New ML Solutions

Based on the formal semantic description of ML solutions, a reasoner will check the consistency and correctness of the existing ML knowledge encoding and optimise the existing ML solutions. Furthermore, new ML solutions can be created by reasoning over all the constraints defined by data, domain and performance requirements.
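As a toy stand-in for reasoning over constraints, the sketch below enumerates combinations from a small, hypothetical solution space (one preprocessing step and one model) and keeps only those consistent with the declared constraints: each component states which data properties it requires and which it provides, and the available data and a performance requirement (here a budget on training cost) prune the space. Exhaustive enumeration with `itertools.product` replaces the semantic reasoner described above; component names, properties and costs are assumptions for illustration.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Component:
    name: str
    requires: frozenset  # data properties the component needs in its input
    provides: frozenset  # data properties its output guarantees
    cost: int            # hypothetical relative training cost


PREPROCESSORS = [
    Component("Impute", frozenset({"tabular"}), frozenset({"tabular", "complete"}), 1),
    Component("ExtractFeatures", frozenset({"time_series"}), frozenset({"tabular", "complete"}), 3),
]
MODELS = [
    Component("LinearRegression", frozenset({"tabular", "complete"}), frozenset({"prediction"}), 1),
    Component("NeuralNetwork", frozenset({"tabular", "complete"}), frozenset({"prediction"}), 5),
]


def valid_pipelines(data_properties: set[str], max_cost: int) -> list[tuple[str, str]]:
    """Return (preprocessor, model) pairs whose requirements are met and whose total cost fits."""
    solutions = []
    for pre, model in product(PREPROCESSORS, MODELS):
        if not pre.requires <= data_properties:
            continue  # the available data cannot feed this preprocessor
        if not model.requires <= pre.provides:
            continue  # the preprocessor output does not satisfy the model
        if pre.cost + model.cost <= max_cost:
            solutions.append((pre.name, model.name))
    return solutions


# Hypothetical scenario: process curves arrive as time series, with a budget of 5 cost units.
print(valid_pipelines({"time_series"}, max_cost=5))  # prints [('ExtractFeatures', 'LinearRegression')]
```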

Schematic Illustration of the System of Automated Machine Learning

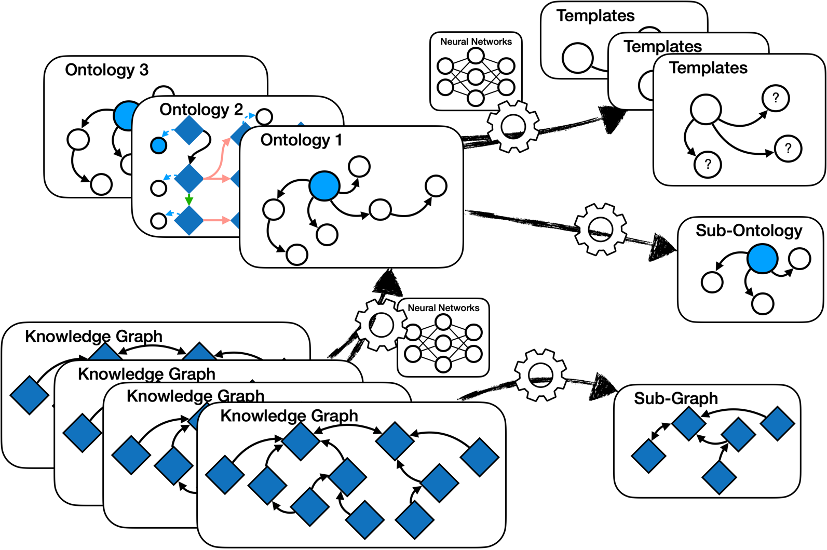

Topic 4: Neuro-Symbolic Knowledge Analytics

Industrial contexts offer vast amounts of unstructured and structured data, formal descriptions of knowledge, and patterns of knowledge. Neuro-symbolic methods allow one to combine learning over heterogeneous data with symbolic background knowledge of various kinds. Within this scope, we do research on [7-12]:

- Learning ontologies from data (e.g. RDF triples) and learning templates from ontologies, e.g. by relying on supervised or unsupervised learning and knowledge embeddings.

- Extracting and summarising sub-graphs based on users' interests and queries.

- Summarising ontologies with respect to users' interests, relying on ontology modularity, forgetting and the computation of justifications.
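The simplest baseline for extracting a sub-graph around a user's interests is a k-hop neighbourhood: starting from the entities a user mentions, collect every triple reachable within k steps. The breadth-first sketch below does this over a hypothetical list of triples, treating edges as undirected. It is only a baseline for intuition; the cited works use more refined techniques, e.g. entity summarisation with user feedback [7] and keyword search via hub labelings [11].

```python
from collections import deque

Triple = tuple[str, str, str]


def k_hop_subgraph(triples: list[Triple], seeds: set[str], k: int) -> list[Triple]:
    """Collect the triples reachable within k hops of the seed entities (edges treated as undirected)."""
    neighbours: dict[str, list[Triple]] = {}
    for t in triples:
        s, _, o = t
        neighbours.setdefault(s, []).append(t)
        neighbours.setdefault(o, []).append(t)

    visited = set(seeds)
    selected: set[Triple] = set()
    frontier = deque((entity, 0) for entity in seeds)
    while frontier:
        entity, depth = frontier.popleft()
        if depth == k:
            continue  # do not expand beyond k hops
        for t in neighbours.get(entity, []):
            selected.add(t)
            s, _, o = t
            other = o if s == entity else s
            if other not in visited:
                visited.add(other)
                frontier.append((other, depth + 1))
    # Keep the original order of the graph for readability.
    return [t for t in triples if t in selected]


# Hypothetical manufacturing knowledge graph.
kg = [
    ("ex:Line1", "ex:hasMachine", "ex:WeldingGun7"),
    ("ex:WeldingGun7", "ex:produced", "ex:spot_1"),
    ("ex:spot_1", "ex:diameter", "5.1"),
    ("ex:Line2", "ex:hasMachine", "ex:WeldingGun9"),
]
print(k_hop_subgraph(kg, {"ex:Line1"}, k=2))
```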

Schematic Illustration of the System of Neuro-Symbolic Knowledge Analytics

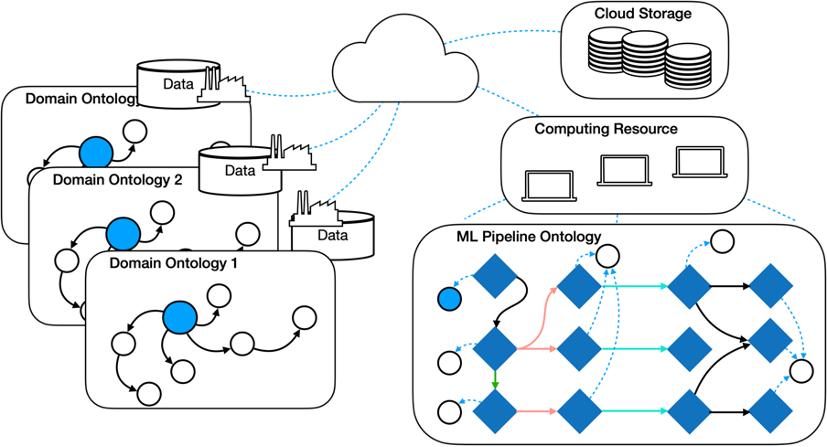

Topic 5: Scalable Machine Learning on the Cloud

Scalable ML on the cloud brings ML solutions to the cloud level, where they can handle the analysis of big data. In particular, our research addresses the following aspects:

Semantic Abstraction

This layer mediates between the users and the system components, including data, domain knowledge (encoded in domain ontologies), ML modules and ML knowledge, and cloud resources (e.g. storage and computing resources), allowing users to conveniently access all system components on the cloud level.

Reasoning About the Cloud

Reasoning should automatically compute the system requirements and configurations, minimising users' effort in deploying ML on the cloud.
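As a toy illustration of reasoning about cloud resources, the sketch below derives a memory requirement from the dataset size and picks the cheapest configuration in a catalogue that satisfies it together with a CPU requirement. The catalogue, the prices and the three-times-dataset memory rule are hypothetical assumptions; a real system would reason over semantic descriptions of actual provider offerings.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Instance:
    name: str
    vcpus: int
    memory_gb: float
    price_per_hour: float


# Hypothetical catalogue; real offerings and prices differ per provider.
CATALOGUE = [
    Instance("small", 2, 8.0, 0.10),
    Instance("medium", 8, 32.0, 0.40),
    Instance("large", 32, 128.0, 1.60),
]


def required_memory_gb(dataset_gb: float, overhead_factor: float = 3.0) -> float:
    """Assumed rule of thumb: keep the dataset in memory with room for intermediate results."""
    return dataset_gb * overhead_factor


def choose_instance(dataset_gb: float, min_vcpus: int) -> Optional[Instance]:
    """Cheapest instance meeting the CPU and memory requirements, or None if nothing fits."""
    memory = required_memory_gb(dataset_gb)
    feasible = [i for i in CATALOGUE if i.vcpus >= min_vcpus and i.memory_gb >= memory]
    return min(feasible, key=lambda i: i.price_per_hour, default=None)


print(choose_instance(dataset_gb=10.0, min_vcpus=4))
```

Returning `None` when nothing fits leaves room for a follow-up decision, such as distributing the job over several instances.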

Schematic Illustration of the System of Scalable Machine Learning on the Cloud

Selected Publications for Further Reading

[1] Baifan Zhou*, Yulia Svetashova*, Andre Gusmao, Ahmet Soylu, Gong Cheng, Ralf Mikut, Arild Waaler, Evgeny Kharlamov. “SemML: Facilitating Development of ML Models for Condition Monitoring with Semantics.” Under review at Journal of Web Semantics. *: equal contribution

[2] Baifan Zhou, Yulia Svetashova, Seongsu Byeon, Tim Pychynski, Ralf Mikut, Evgeny Kharlamov. “Predicting Quality of Automated Welding with Machine Learning and Semantics: A Bosch Case Study.” CIKM 2020: 2933-2940

[3] Baifan Zhou, Yulia Svetashova, Tim Pychynski, Evgeny Kharlamov. “Semantic ML for Manufacturing Monitoring at Bosch.” ISWC (Industry) 2020

[4] Baifan Zhou, Yulia Svetashova, Tim Pychynski, Ildar Baimuratov, Ahmet Soylu, Evgeny Kharlamov. “SemFE: Facilitating ML Pipeline Development with Semantics.” CIKM (Demo) 2020

[5] Yulia Svetashova*, Baifan Zhou*, Tim Pychynski, Stefan Schmidt, York Sure-Vetter, Ralf Mikut, Evgeny Kharlamov. “Ontology-Enhanced Machine Learning: A Bosch Use Case of Welding Quality Monitoring.” ISWC (2) 2020: 531-550. *: equal contribution

[6] Yulia Svetashova, Baifan Zhou, Stefan Schmid, Tim Pychynski, Evgeny Kharlamov. “SemML: Reusable ML for Condition Monitoring in Discrete Manufacturing.” ISWC (Demo) 2020

[7] Qingxia Liu, Yue Chen, Gong Cheng, Evgeny Kharlamov, Junyou Li, Yuzhong Qu. “Entity Summarization with User Feedback.” ESWC 2020: 376-392

[8] Junyou Li, Gong Cheng, Qingxia Liu, Wen Zhang, Evgeny Kharlamov, Kalpa Gunaratna, Huajun Chen. “Neural Entity Summarization with Joint Encoding and Weak Supervision.” IJCAI 2020: 1644-1650

[9] Shuxin Li, Zixian Huang, Gong Cheng, Evgeny Kharlamov, Kalpa Gunaratna. “Enriching Documents with Compact, Representative, Relevant Knowledge Graphs.” IJCAI 2020: 1748-1754

[10] Wenzheng Feng, Jie Zhang, Yuxiao Dong, Yu Han, Huanbo Luan, Qian Xu, Qiang Yang, Evgeny Kharlamov, Jie Tang. “Graph Random Neural Networks for Semi-Supervised Learning on Graphs.” NeurIPS 2020

[11] Yuxuan Shi, Gong Cheng, Evgeny Kharlamov. “Keyword Search over Knowledge Graphs via Static and Dynamic Hub Labelings.” WWW 2020: 235-245

[12] Evgeny Kharlamov, Dag Hovland, Martin G. Skjæveland, Dimitris Bilidas, Ernesto Jiménez-Ruiz, Guohui Xiao, Ahmet Soylu, et al. “Ontology based data access in Statoil.” Journal of Web Semantics, vol. 44, pp. 3-36 (2017)

Contact

Collaborating Organizations

SINTEF, Norway

Nanjing University, China

Free University of Bozen-Bolzano, Italy

Oslo Metropolitan University, Norway

Norwegian University of Science and Technology, Norway

ITMO University, Russia

Researchers

Jieying Chen, University of Oslo

Gong Cheng, Nanjing University

Ognjen Savkovic, Free University of Bozen-Bolzano

Ahmet Soylu, Oslo Metropolitan University

Titi Roman, SINTEF

Nikolay Nikolov, SINTEF

Ildar Baimuratov, ITMO University